With Amazon’s penetration into tier-2 and tier-3 cities in India and other similar geographies, such as the UAE, Egypt and Brazil where addresses do not follow a standard structure, correctly predicting customer locations (i.e., geocodes) is challenging and crucial to delivering packages successfully. We achieve this through an India first solution that effectively leverages historical delivery data and state-of-the-art technologies, such as deep metric learning and geo-indexing.

Motivation

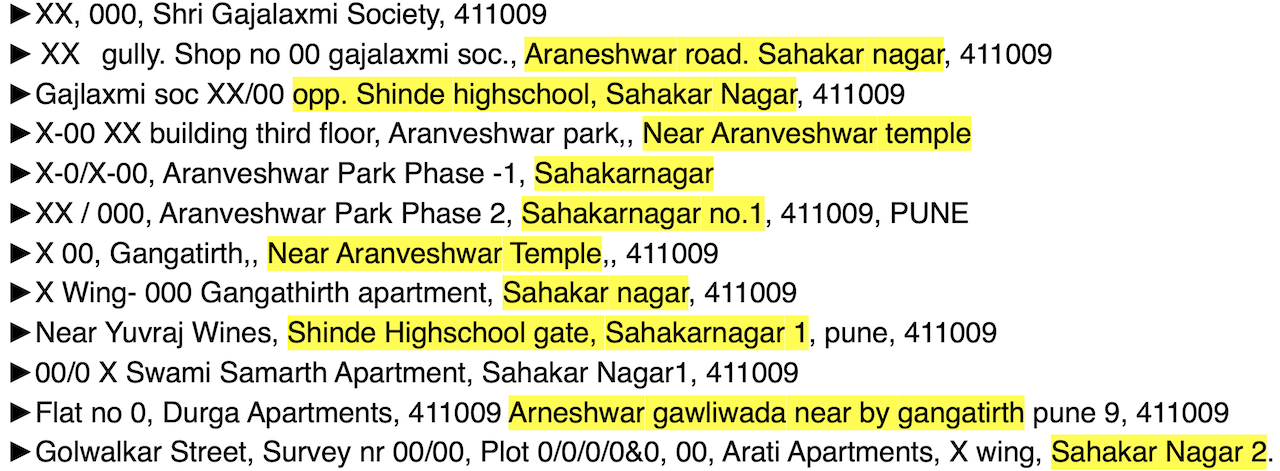

Amazon aims to optimize the delivery experience of both customers and delivery associates when packages travel from the final delivery stations to customer doorsteps. Customers provide information regarding their whereabouts through the address text. It is infeasible to manually map addresses to locations given the large scale. Learning locations from this free-form input is challenging in emerging geographies due to lack of standardisation in address text in form of spelling variations, missing components, vernacular content, synonyms and abbreviations. Figure 1 presents a glimpse into a small 200m x 200m area from Sahakar Nagar locality in Pune city of India. It illustrates huge variations in the mention of localities and streets along with lack of a common structure across customers.

Figure 1. Few anonymised addresses from a 200m x 200m area of Sahakar Nagar, Pune. Specific information in address text is anonymised to preserve customer privacy.

Learning delivery locations can be a trivial task when either 1) the geography follows a fixed format for writing addresses, making it easy to look-up the address against a reference data set; or 2) sufficient number of historical deliveries have been made to the address. With Amazon’s penetration into tier-2 and tier-3 cities in India and other similar geographies, we face a unique challenge posed by the combination of loosely-structured addresses and higher proportion of new-to-Amazon customers.

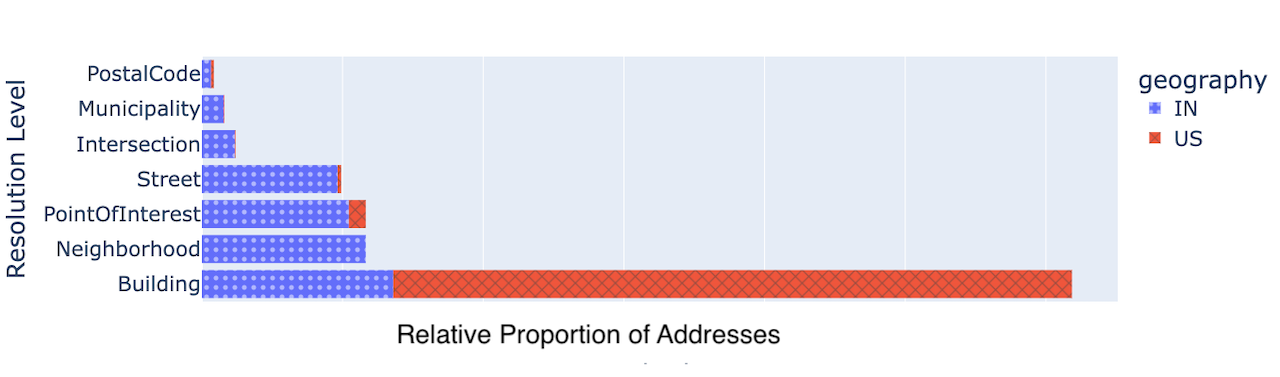

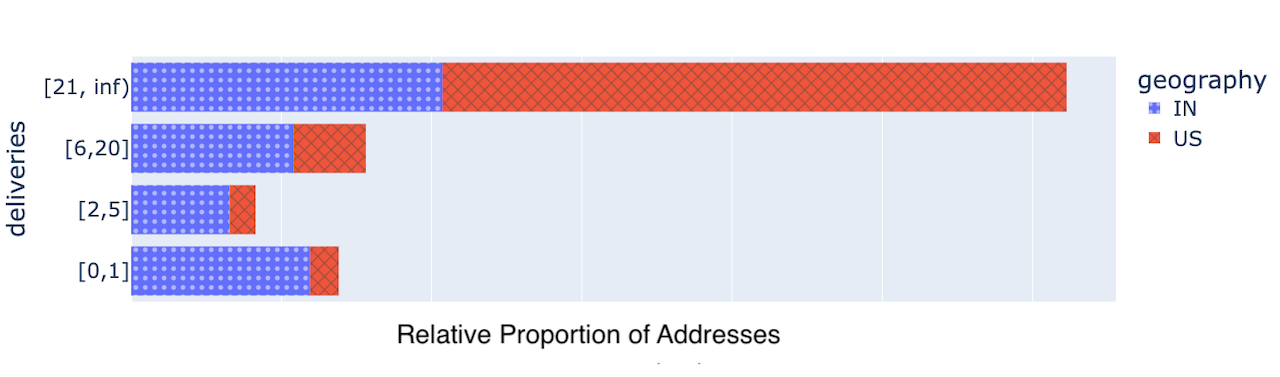

Figure 2 shows a graphical comparison of relative proportions of addresses resolvable at different levels using a popular maps service, HERE, for geographies with structured and unstructured addresses. Figure 3 shows another comparison of the same cohorts with historical successful delivery counts.

Learning neighbourhoods

In order to automatically learn delivery locations for loosely-structured addresses at scale, we design a novel solution which leverages historical delivery information to learn semantic similarity in address text. We make use of state-of-the-art technologies, such as deep metric learning, geo-indexes and transformers. Note that in the scope of this work, we are not trying to build a delivery planning and route optimization system which would require breaking up a large region into non-overlapping zones and map customers/drivers to them. We rather focus on predicting location for individual customers, which is a useful input for multiple delivery planning and optimisation tasks. We adopt a bottoms-up approach where we do not pre-define neighbourhoods and learn it in a lazy but low latency manner given an address. This design choice provides us good flexibility and allows to scale to any new geography without being affected by local physical nuances. This solution not only helps Amazon to deliver efficiently in less structured geographies, but also enables delivery defect reduction in other geographies (including NA and EU regions) for the proportion of traffic impacted by noisy addresses.

With a carefully designed training strategy, which uses open source Uber’s H3 indexing, our model understands that, for example, MG Road in Bengaluru city is proximal to Brigade road, and Q-city can also be referred to as Financial District in Hyderabad, even without having any lexical similarly among tokens. Once our model learns this notion of semantic similarity in addresses, it can be deployed to perform location learning and many other delivery planning tasks. We dive deep specifically into locations learning in here.

Encoding proximity among addresses

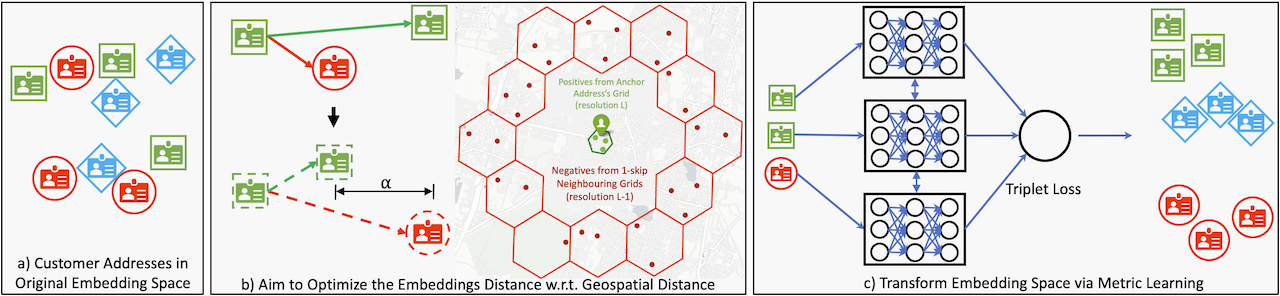

This work performs geocode prediction of a query address by aggregating geocodes of its neighbours. Thus, retrieving closest physical neighbours for the query address is of utmost importance. We use address embeddings and open source Approximate Nearest Neighbours (ANN) search techniques to perform nearest neighbours lookup. Context-insensitive (e.g., FastText) or contextualized embedding methods (e.g., RoBERTa) struggle to learn quality address embeddings as addresses do not follow a document or a paragraph like organization, rather they are related by geospatial proximity. To tackle this challenge, we introduce a deep metric learning based proximity-aware address embedding framework as illustrated in Figure 4. Address embeddings in the original embedding space (e.g., RoBERTa embeddings) are not aligned as per proximity among addresses in real world (cf. Fig. 4a). We propose a scalable approach for training triplet generation based on H3 spatial index (cf. Fig. 4b) for embedding space transformation. As a contrastive learning setup, each address embedding is extracted in isolation and transformed to mimic the real word proximity relations among the whole corpus of addresses by optimizing with a triplet margin loss (cf. Fig. 4c).

Training data generation

Supervised metric learning algorithms for classification tasks (e.g., object, face identity detection) widely use instance class labels to generate the training data. However, manually labeling the match/no-match address pairs at scale is expensive and thus we employ historical delivery geocodes to automatically generate the weakly-labeled training triplets. We propose to use H3 geospatial index to retrieve positive and negative addresses in an intelligent manner. For an address, T positive addresses are sampled from its H3 grid of level L, and T negative addresses are sampled from the ring of parent’s (i.e., level L - 1) 1-skip neighbouring grids as shown in Figure 4b.

Experiments and results

We experiment with IN and the UAE addresses in this work. For evaluation, a few weeks of out-of-time network wide shipments are considered where learnt geolocations are compared against the observed delivery GPS scans (marked by delivery associates). As our solution is targeted towards hard-to-resolve cases where the existing models perform poorly, we benchmark the current production geocoding system on the considered hard-to-resolve test set and report relative improvements over it (refer to 1 for further details around datasets).

Model configurations and baselines

For a comparative analysis, we use multiple baseline models as described following: FastText (trained on address data), Transformer-General (public RoBERTa-base), and Transformer-Address (RoBERTa pre-trained on address data). Further, we have metric learning based models: Transformer-Triplet (baseline trained on triplets generated at fixed distance) and our proposed Transformer-Triplet-H3 (trained using our proposed H3 based triplet generation strategy).

Assessing Address Embedding Quality

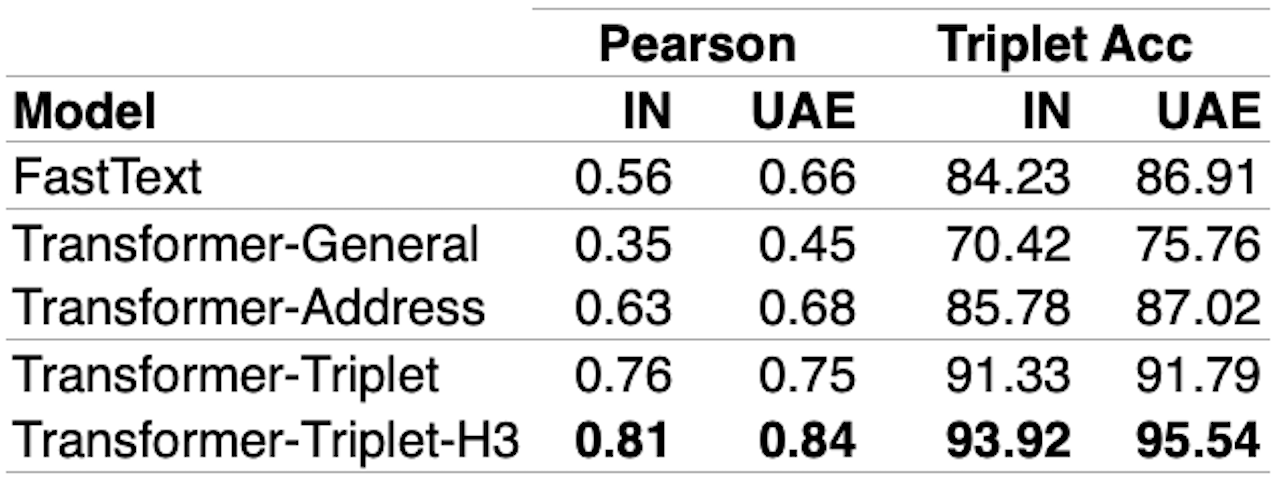

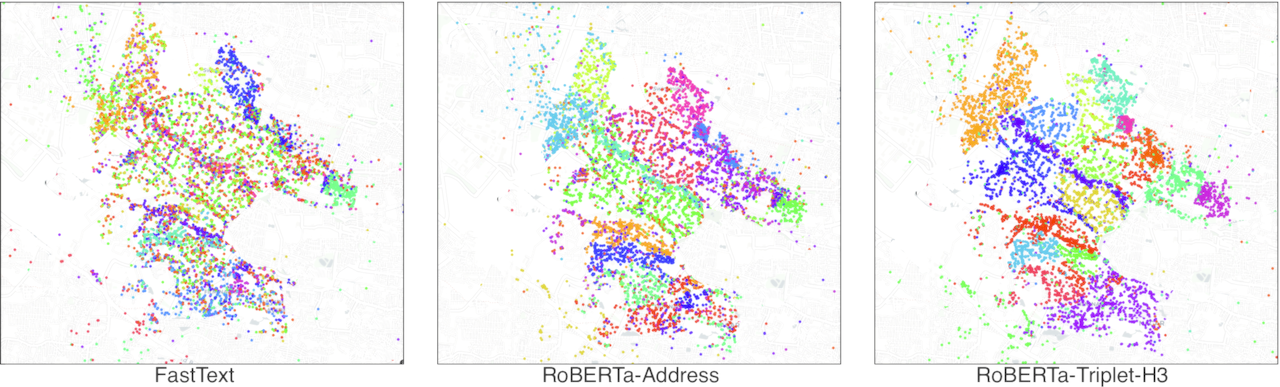

To intrinsically measure the geospatial proximity semantics captured in address embeddings (cf. Table 1), we compute Pearson correlation of embeddings cosine distance with geospatial distance. Further, we compute triplet accuracy where triplets are constrained by the condition that anchor will be geospatially closer to the positive than the negative and measure if embeddings pass the same criterion using cosine distance. We also do a qualitative analysis by clustering (K-means with K=20) addresses using their embeddings and visualizing them via their geocodes (cf. Figure 5). Better geospatially aware embeddings should result in geospatially coherent clusters. We observe that the metric learning based models outperform others because of better proximity understanding.

Geolocating hard-to-resolve addresses

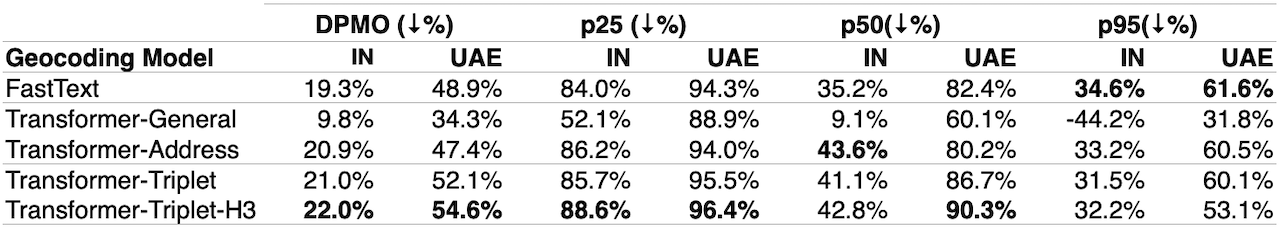

For an extrinsic evaluation, we compute neighbourhood-level geocodes via Kernel Density Estimation (KDE) score over the retrieved neighbours which can guide the drivers to a closer proximity in the absence of any better geocode. Equation 1 formulates the kernel density estimator P over the retrieved neighbours N where K (x; h) is a Gaussian kernel with haversine metric. The bandwidth h works as a smoothing parameter, controlling the tradeoff between bias and variance in the result. A large h leads to a smooth density distribution (i.e., high bias), whereas a small bandwidth results in an unsmooth density distribution (i.e., high variance). We chose h as 200 meters in our experiments after validation over 25 m to 400 m range. Table 2 presents results via various geocoding metrics relative to the production baseline on shipments for the chosen test period. Defects Per Million Opportunities (DPMO) measures the number of predictions falling beyond Y meters normalized to a million. The percentile metrics (p25, p50, p95) capture the distribution of error distances (actual vs predicted geocode) on the test set. It can be observed from Table 2 that the proposed models based on deep metric learning outperform the production baseline by a substantial margin as well as stand superior in comparison to the other baselines, i.e., FastText and basic Transformer models.

The Way Forward

In this blog, we presented an efficient metric learning based approach to facilitate capturing of geospatial proximity semantics in address embeddings. We observe quantifiable improvements in address embeddings quality and encouraging results from experiments on hard-to-resolve addresses. Our model operates solely using address text at the inference time, and is trained without any manually curated labels making it scalable across emerging geographies. In future, we will explore a pairwise cross encoder model to re-rank or filter the poorly retrieved neighbours and further enhance our negatives mining strategies. We will also explore geospatial constraints-aware neighbourhood learning (e.g., to ensure neighbourhoods do not cross water bodies and non-crossable highways).

Data science professionals working with tasks involving geospatial data (e.g., customer addresses, geocodes) can directly benefit by leveraging neighbourhood-aware address embedding. Further, our proposed approach can also be used to create address related features in cross-domain applications such as buyer fraud prediction, Point-of-Interest deduplication/recommendation and urban planning analysis.

We have utilised Python programming language and open source libraries PyTorch, HuggingFace and Annoy to prototype the above solution. For more details, please refer to our research paper published in Industry Track at EMNLP, 2022

[1] Learning Geolocations for Cold-Start and Hard-to-Resolve Addresses via Deep Metric Learning

DISCLAIMER: The views expressed in this blog are solely those of the author and do not represent the views of Amazon. The information presented in this blog is for informational and educational purposes only. The author provides no guarantees or warranties regarding the accuracy, completeness, or usefulness of any content.

Users acknowledge that any reliance on material in this blog is at their own risk. Amazon does not endorse any third party products, services, or content linked from this blog. Any links or reference to third party sites or products are provided for convenience only. Amazon is not responsible for the content, products, or practices of any third party site.

The author reserves the right to make changes to this blog and its content at any time without notice. This blog may contain the author's personal opinions, which do not necessarily reflect those of Amazon.